In recent years, processor development has accelerated at an unprecedented pace. Every year, companies build processors on finer manufacturing processes, which have now reached 7nm. But clock speed and manufacturing process are not the only factors that determine a processor's performance; there is also the cache.

For more information about processors and their specifications, you can review our previous articles:

- A comprehensive comparison of laptop processors and their differences: how do you choose the right processor for your computer and your usage?

- Everything you need to know about AMD processors, the complete guide to the company’s best value processors!

You may have come across cache memory when browsing the specifications of a computer processor. We normally don't pay much attention to the cache, and it is rarely the most prominent specification in manufacturers' advertising for their processors. But how important is the CPU cache, and how does it work?

What is the processor cache?

Simply put, the cache is just a very fast type of memory. As you know, a computer has several types of storage. There are primary, permanent storage devices such as HDDs or SSDs, which hold the bulk of the data, including the operating system and all installed programs.

There is also random access memory, known as RAM, which is much faster than the storage disks and holds data temporarily rather than permanently.

Finally, the CPU itself contains even faster memory units called caches.

You can review our articles on RAM and storage disks:

- Everything you need to know about RAM: what is it, and what do the terms associated with it mean?

- SSDs: which of the two types is best for you, PCIe or SATA? What’s the difference between them?

When we rank the types of memory in a computer by speed, cache memory sits at the top of the list. It is the fastest of them all and is part of the CPU itself, where instructions are processed and calculations are carried out.

The cache is built from static RAM (SRAM). Compared to the synchronous dynamic RAM (SDRAM) used for the computer's main memory, it is much faster and can hold data without having to be constantly refreshed, making it ideal for use as a CPU cache.

How does the cache work?

As you know, computer programs are written as sets of instructions, and these instructions are run by the CPU. When you run a program, its instructions must make their way from primary storage on the disk to the processor. But how does this process work?

The instructions are first loaded from the storage disk into RAM and then sent to the CPU to be executed. These days, processors can execute a huge number of instructions per second. But to take full advantage of that power, the CPU needs access to very fast memory. RAM is not fast enough to keep the processor supplied with instructions. Hence the need for faster memory, and this is where the cache comes in.

The memory controller takes data from RAM and sends it to the cache. Depending on the CPU in your computer, this controller sits either in the northbridge chipset on the motherboard or within the CPU itself.

The cache then passes the instructions along within the CPU. It is fast enough to keep up with the processor and supplies it with instructions in sequence, through a precise hierarchy.

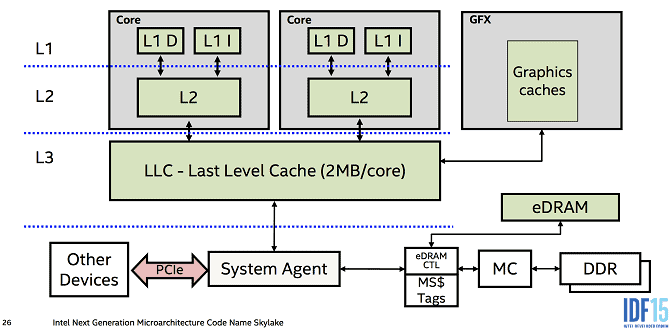

Cache levels: L1, L2 and L3

The CPU cache is divided into three main levels: L1, L2, and L3. They are ordered from fastest and smallest to slowest and largest. The faster the memory, the smaller its capacity.

The L1 (Level 1) cache is the fastest memory in the computer. In terms of access priority, the L1 cache holds the data the CPU is most likely to need while completing a specific task.

The L1 cache usually has a total capacity of up to 256 KB. However, some powerful CPUs have approximately 1 MB, and some server processors (such as Intel Xeon CPUs) contain 1-2 MB of L1 cache. These figures are totals across all cores; each core's own L1 cache is typically only tens of kilobytes.

The L1 cache is usually split into two parts: the instruction cache and the data cache. The instruction cache holds information about the operations the CPU must perform, while the data cache holds the data on which those operations will be performed.

The L2 (Level 2) cache is slower than the L1 cache but larger. Its capacity usually ranges from 256 KB to 8 MB, though new and powerful CPUs go beyond that. The L2 cache holds data that the CPU is likely to access next during execution. In most modern CPUs, the L1 and L2 caches are located inside the CPU itself.

The L3 (Level 3) cache is the largest cache and also the slowest. Its capacity can range from 4 MB to 50 MB.
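The way the three levels cooperate can be illustrated with a small Python sketch. It is a simplified model and not how any particular CPU is implemented: the `CacheLevel` class, the `read` function, the tiny capacities (counted in entries rather than bytes), and the least-recently-used eviction rule are all assumptions chosen for illustration.

```python
from collections import OrderedDict


class CacheLevel:
    """One cache level that holds a limited number of entries (hypothetical model)."""

    def __init__(self, name, capacity):
        self.name = name
        self.capacity = capacity      # number of entries, not bytes (a simplification)
        self.entries = OrderedDict()  # address -> value, least recently used first

    def put(self, address, value):
        self.entries[address] = value
        self.entries.move_to_end(address)      # mark as most recently used
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)   # evict the least recently used entry


def read(address, levels, ram):
    """Return (value, name of the level that served the request)."""
    for i, level in enumerate(levels):
        if address in level.entries:
            level.entries.move_to_end(address)
            value = level.entries[address]
            for faster in levels[:i]:          # copy into the faster levels above
                faster.put(address, value)
            return value, level.name
    value = ram[address]                       # not cached anywhere: go to main memory
    for level in levels:
        level.put(address, value)
    return value, "RAM"


# Assumed toy sizes: each level is larger than the one before it.
levels = [CacheLevel("L1", 2), CacheLevel("L2", 4), CacheLevel("L3", 8)]
ram = {addr: addr * 10 for addr in range(16)}

served_by = [read(addr, levels, ram)[1] for addr in [0, 1, 2, 0, 1, 5]]
print(served_by)  # ['RAM', 'RAM', 'RAM', 'L2', 'L2', 'RAM']
```

In this run, addresses 0 and 1 are pushed out of the tiny L1 by later accesses but are still found in the larger L2, which is the point of having several levels of increasing size.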

What does Cache Hit or Cache Miss mean? What does Latency mean?

Data flows from RAM to the L3 cache, then to L2, and finally to L1. When the processor needs data to perform an operation, it first looks for it in the cache, starting with L1. If the CPU finds the data there, we call this a cache hit. If it does not find the data in the cache, it has to fetch it from main memory. We call this a cache miss.
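To make hits and misses concrete, here is a minimal sketch that counts them for a direct-mapped cache, one of the simplest cache organizations. The function name `simulate_direct_mapped` and the default sizes (4 lines of 16 bytes each) are assumptions picked to keep the numbers small; real caches are far larger and usually more sophisticated.

```python
def simulate_direct_mapped(addresses, num_lines=4, line_size=16):
    """Count (hits, misses) for a sequence of byte addresses."""
    tags = [None] * num_lines   # tag stored in each cache line, None means empty
    hits = misses = 0
    for address in addresses:
        block = address // line_size   # memory block that contains this byte
        index = block % num_lines      # the only cache line this block may occupy
        tag = block // num_lines       # tells apart blocks that share a line
        if tags[index] == tag:
            hits += 1                  # cache hit: the block is already there
        else:
            misses += 1                # cache miss: fetch the block from main memory
            tags[index] = tag          # it replaces whatever the line held before
    return hits, misses


# 64 consecutive bytes: only the first byte of each 16-byte block misses.
print(simulate_direct_mapped(range(64)))     # (60, 4)

# Two addresses that map to the same line keep evicting each other.
print(simulate_direct_mapped([0, 64] * 3))   # (0, 6)
```

The two access patterns show why the order in which a program touches memory matters: sequential access reuses each fetched block many times, while the alternating pattern misses on every access.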

The cache is designed to speed up the transfer of information back and forth between main memory and the CPU. The time needed to access data in memory is called latency. L1 has the lowest latency, being the fastest and closest to the processor, and L3 has the highest of the three levels. Latency increases considerably on a cache miss, because the CPU then has to fetch the data from main memory.

As computers get faster and more sophisticated, latency in modern devices keeps decreasing. We now have faster generations of RAM (such as DDR4) and high-speed SSDs with low access times as primary storage, both of which significantly reduce overall latency.

In the past, cache designs placed the L2 and L3 caches outside the CPU, which hurt latency. However, advances in manufacturing processes now allow far more transistors to fit into the same space. As a result, the cache can be placed closer to the CPU without worrying about space, which significantly reduces latency.
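The effect of misses on latency can be estimated with the standard average memory access time calculation, in which each level adds its own latency plus its miss rate multiplied by the cost of going one level further out. The sketch below applies it in Python. The latencies (in CPU cycles) and miss rates are made-up illustrative values, not measurements of any real processor, and `average_access_time` is a hypothetical helper name.

```python
def average_access_time(levels, memory_latency):
    """levels: list of (hit_latency, miss_rate) pairs, ordered from L1 outward."""
    time = memory_latency
    # Work inward from main memory: each level costs its own latency, plus
    # the cost of the next level out for the fraction of accesses that miss.
    for hit_latency, miss_rate in reversed(levels):
        time = hit_latency + miss_rate * time
    return time


# Assumed example values: (latency in cycles, fraction of accesses that miss).
cache_levels = [
    (4, 0.10),    # L1
    (12, 0.50),   # L2
    (40, 0.50),   # L3
]
ram_latency = 200  # assumed cost of reaching main memory, in cycles

with_cache = average_access_time(cache_levels, ram_latency)
without_cache = average_access_time([], ram_latency)
print(f"with cache: {with_cache:.1f} cycles, without: {without_cache:.1f} cycles")
```

With these assumed numbers the average access costs roughly 12 cycles instead of 200, even though every level beyond L1 is much slower than L1 itself.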

The future of cache memory

Cache design continues to evolve, and over time cache memory becomes cheaper, faster, and denser. Intel and AMD have both released many processors with major advances in cache memory, and among the most notable efforts are Intel’s experiments with an L4 cache.

There is a lot of work going on in this area. Reducing memory latency may well remain the main focus of development. Companies are constantly improving their cache designs, and the future looks promising.
